DARPA ANSR

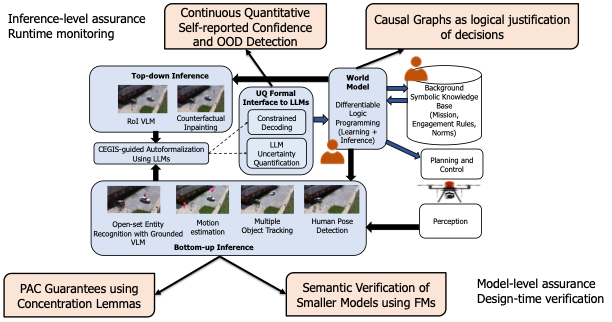

Abstract: To successfully incorporate autonomous systems into their missions, military operators must have confidence that those systems will operate safely and perform as intended. Through the Assured Neuro Symbolic Learning and Reasoning (ANSR) program, DARPA is motivating new thinking and approaches to artificial intelligence development that enable high levels of trust in autonomous systems. ANSR seeks breakthrough innovations in the form of new, hybrid AI algorithms that integrate symbolic reasoning with data-driven learning to create robust, assured, and therefore trustworthy systems. ANSR defines a system as trustworthy if it is: robust to domain-informed and adversarial perturbations; supported by an assurance framework that creates and analyzes heterogeneous evidence toward safety and risk assessments; and predictable with respect to some specification and models of fitness. Advances in assurance technologies, including formal and simulation-based approaches, have helped accelerate the identification of failure modes and defects in machine learning (ML) algorithms. Unfortunately, the ability to repair defects in state-of-the-art ML remains limited to retraining, which is not guaranteed to eliminate defects or to improve the generalizability of ML algorithms. Further, while a runtime assurance architecture (e.g., monitoring and recovery) ensures operational safety, frequent invocations of fallback recovery, triggered by the brittleness and limited generalizability of ML, compromise the ability to accomplish a mission. ANSR hypothesizes that several of the limitations in ML today are a consequence of the inability to incorporate contextual and background knowledge and of treating each data set as an independent, uncorrelated input. In the real world, observations are often correlated and are the product of an underlying causal mechanism, which can be modeled and understood.
ANSR also posits that hybrid AI algorithms capable of acquiring and integrating symbolic knowledge and performing symbolic reasoning at scale will deliver robust inference, generalize to new situations, and provide evidence for assurance and trust. SRI is developing TrinityNS, a novel neuro-symbolic AI framework that addresses ANSR challenges by combining foundation models, logic programming, and multimodal generative AI techniques.
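The runtime assurance architecture mentioned in the abstract (monitoring plus fallback recovery) can be illustrated with a minimal, simplex-style sketch. It is not taken from ANSR or TrinityNS: the names `RuntimeAssuranceWrapper`, `is_safe`, and `run_demo`, the 1-D state, and the toy controllers are all hypothetical. The sketch shows why a learned policy that often leaves the safe envelope keeps the system safe but drives up the fallback rate.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical types: a scalar state and a scalar action keep the sketch minimal.
Controller = Callable[[float], float]
SafetyCheck = Callable[[float, float], bool]


@dataclass
class RuntimeAssuranceWrapper:
    """Simplex-style runtime assurance: use the ML action only if the monitor accepts it."""
    ml_controller: Controller
    fallback_controller: Controller
    is_safe: SafetyCheck
    steps: int = 0
    fallbacks: int = 0

    def act(self, state: float) -> float:
        self.steps += 1
        proposed = self.ml_controller(state)
        if self.is_safe(state, proposed):
            return proposed  # monitor accepts the learned action
        self.fallbacks += 1  # recovery path: hand control to the fallback
        return self.fallback_controller(state)

    def fallback_rate(self) -> float:
        return self.fallbacks / self.steps if self.steps else 0.0


def run_demo() -> Tuple[List[float], float]:
    # Toy 1-D setting (assumption): the next position is state + action,
    # and the safe envelope is |next position| <= 1.0.
    wrapper = RuntimeAssuranceWrapper(
        ml_controller=lambda s: 0.5,        # "brittle" policy: always pushes forward
        fallback_controller=lambda s: -s,   # conservative policy: return to origin
        is_safe=lambda s, a: abs(s + a) <= 1.0,
    )
    actions = [wrapper.act(s) for s in [0.0, 0.2, 0.4, 0.6, 0.8]]
    return actions, wrapper.fallback_rate()


if __name__ == "__main__":
    actions, rate = run_demo()
    print(actions, rate)
```

In this toy run the monitor rejects the learned action in two of five steps, so the fallback rate is 0.4. Every step remains safe, but each rejected step is one in which the learned policy contributes nothing to the task, which is the mission-level cost the abstract attributes to frequent fallback recovery.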